The reason to fear chatbots isn’t their intelligence, but rather their potential for misuse

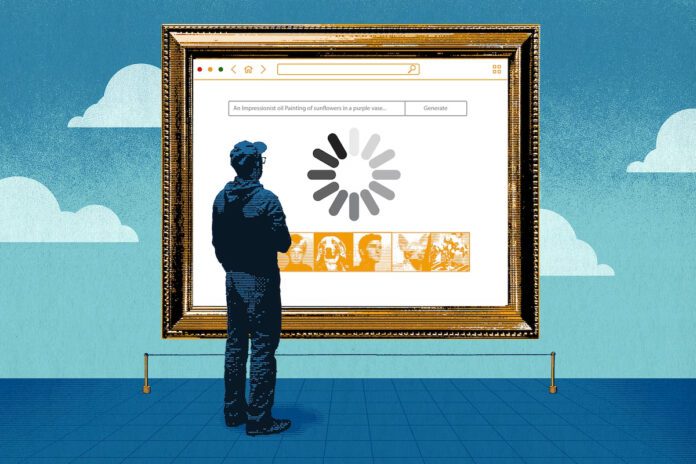

For the past six months, powerful new artificial intelligence tools have been proliferating at a pace that’s hard to process. From chatbots holding humanlike conversations to coding bots spinning up software to image generators spitting out viral pictures of the Pope in a puffer coat, generative AI is suddenly everywhere all at once. And it keeps getting more powerful.

Last week, the backlash hit. Thousands of technologists and academics, headlined by billionaire Elon Musk, signed an open letter warning of “profound risks to humanity” and calling for a six-month pause in the development of AI language models. An AI research nonprofit filed a complaint asking the Federal Trade Commission to investigate OpenAI, the San Francisco start-up behind ChatGPT, and halt further commercial releases of its GPT-4 software. Authorities in Italy moved to block ChatGPT altogether, alleging data privacy violations.

The urge to stand athwart AI, yelling “stop!”, might be understandable. AI applications that may have seemed implausible or even unfathomable a few years ago are rapidly infiltrating social media, schools, workplaces, and even politics. Some people look at the dizzying trajectory of change and see a line that leads to robot overlords and the downfall of humanity.

The good news is that both the hype and fears of all-powerful AI are likely overblown. Impressive as they may be, Google Bard and Microsoft Bing are a long way from Skynet.

The bad news is that anxiety at the pace of change also might be warranted — not because AI will outsmart humans, but because humans are already using AI to outsmart, exploit, and shortchange each other in ways that existing institutions aren’t prepared for. And the more AI is regarded as powerful, the greater the risk people and corporations will entrust it with tasks that it’s ill-equipped to take on.

For a road map to the impact of AI in the foreseeable future, put aside the prophecies of doom and look instead to a pair of reports issued last week — one by Goldman Sachs on the technology’s economic and labor effects and another by Europol on its potential for criminal misuse.

From an economic standpoint, the latest AI wave is about automating tasks that were once done exclusively by humans. Like the power loom, the mechanized assembly line and the ATM, generative AI offers the promise of performing certain types of work more cheaply and efficiently than humans can.

But more cheaply and efficiently doesn’t always mean better, as anyone who has dealt with a grocery self-checkout machine, automated phone answering system or customer service chatbot can attest. And what sets generative AI apart from past waves of automation is that it can mimic humans, even pass as human in certain contexts. That will both enable widespread deception and tempt employers to treat humans as replaceable by AI — even when they aren’t.

The research analysis by Goldman Sachs estimated that generative AI will alter some 300 million jobs worldwide and put tens of millions of people out of work — but also drive significant economic growth. Take the top-line figures with a shake of skepticism; Goldman Sachs predicted in 2016 that virtual reality headsets might become as ubiquitous as smartphones.

But what’s interesting in Goldman’s current AI analysis is its sector-by-sector breakdown of which work might be augmented by language models, and which might be entirely replaced.

The company’s researchers rate white-collar tasks on a difficulty scale from 1 to 7, with “review forms for completeness” at 1 and “make a ruling in court on a complicated motion” at 6. Setting the cutoff for tasks likely to be automated at a difficulty level of 4, they conclude that administrative support and paralegal jobs are the most likely to be automated away, while professions like management and software development stand to have their productivity accelerated.

The report strikes an optimistic tone, calculating that this generation of AI could eventually boost global GDP by 7 percent as corporations get more out of workers who master it. But it also anticipates around 7 percent of Americans will find their careers obsolete in the process, and many more will have to adapt to the technology to remain employable. In other words, even if generative AI goes right rather than wrong, the outcomes could include the dispossession of swaths of workers and the gradual replacement of human faces in the office and daily life with bots.

Meanwhile, we already have examples of companies so eager to cut corners that they’re automating tasks the AI can’t handle — like the tech site CNET autogenerating error-ridden financial articles. And when AI goes awry, the effects are likely to be felt disproportionately by the already marginalized. For all the excitement around ChatGPT and its ilk, the makers of today’s large language models haven’t solved the problem of biased data sets that have already embedded racist assumptions into AI applications such as face recognition and criminal risk-assessment algorithms. Last week brought another example of a Black man being wrongly jailed because of a faulty facial recognition match.

Then there are the cases in which generative AI will be harnessed for intentional harm. The Europol report details how generative AI could be used — and in some cases, is already being used — to help people commit crimes, from fraud and scams to hacks and cyberattacks.

For example, chatbots’ ability to generate language in the style of particular people, or even mimic their voices, could make them potent tools in phishing scams. Language models’ proficiency at writing software scripts could democratize the production of malicious code. Their ability to provide personalized, contextual, step-by-step advice could make them an all-purpose how-to guide for criminals looking to break into a home, blackmail someone, or build a pipe bomb. And we’re already seeing how synthetic images can sow false narratives on social media, reviving fears that deepfakes could distort election campaigns.

Notably, what makes the language models vulnerable to these sorts of abuse is not just their wide-ranging smarts, but also their fundamental intellectual shortcomings. The leading chatbots are trained to clam up when they detect attempts to use them for nefarious purposes. But as Europol notes, “the safeguards preventing ChatGPT from providing potentially malicious code only work if the model understands what it is doing.” As numerous documented tricks and exploits demonstrate, self-awareness remains one of the technology’s weak points.

Given all the risks, you don’t have to buy into doomsday scenarios to see the appeal of slowing the pace of generative AI development to give society more time to adapt. OpenAI itself was founded as a nonprofit on the premise that AI could be built more responsibly without the pressure to meet quarterly earnings goals.

But OpenAI is now leading a headlong race, tech giants are axing their ethicists and, in any case, the horse may have already left the barn. As academic AI experts Sayash Kapoor and Arvind Narayanan point out in their newsletter, AI Snake Oil, the main driver of innovation in language models now is not the push toward ever-larger models, but the sprint to integrate the ones we have into all manner of apps and tools. Rather than try to contain AI, like nuclear weapons, they argue regulators should view AI tools through the lens of product safety and consumer protection.

Maybe most important in the short term is for technologists, business leaders and regulators alike to move past the panic and hype, toward a more textured understanding of what generative AI is good and bad at — and thus more circumspection in adopting it. The effects of AI, if it continues to pan out, will be disruptive no matter what. But overestimating its capabilities will make it more harmful, not less.